MTU and VMware NSX-V Overlay Networks

"MTU refers to the maximum size of an IP packet that can be transmitted without fragmentation over a given medium."

-https://en.wikipedia.org/wiki/Maximum_transmission_unit

Over the years, working with customers to resolve MTU issues on NSX overlay networks exposed my weak understanding of MTU and its implications. My experiences troubleshooting and experimenting with MTU are the inspiration for this blog post. I needed to strengthen my knowledge of MTU because VXLAN network traffic requires the network to transport data frames with an MTU of at least 1600 bytes. The standard MTU in TCP/IP networking devices is 1500 bytes. This has been the standard since the birth of the Internet, which I'm going to define here as the decade the TCP/IP protocol suite was invented, the mid-1970s. MTU is a well-documented network attribute. However, reading documentation about MTU didn't prepare me to understand the implications of changing MTU or how to troubleshoot MTU problems.

Surely you've been bored to tears by my previous paragraph explaining MTU basics. Trust me, I've been there. Let's get into the lab and start looking at the exciting stuff. I'm going to use my home lab for this post, which is a very modest lab.

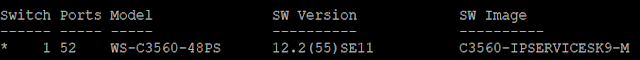

Underlay network:

Cisco 3560 switch

When I was studying for my CCNP routing and switching exam, I purchased a lab to help me pass the exam. Interestingly, my lab gear did not come with any layer 3 switches, so I decided to drop $300 on this layer 3 switch to help me pass the CCNP switching exam. It is a fairly old switch and has mostly FastEthernet ports with a maximum MTU of 1998. It's not quite up to spec with the latest and greatest datacenter switches, but it will do for explaining how MTU impacts overlay networking.

I searched on Google for the command syntax to show and change the MTU of the switch. It's important that you understand how to leverage Google for this, since NSX is agnostic of your physical network vendor. Any vendor's network equipment is capable of supporting NSX. For my lab switch:

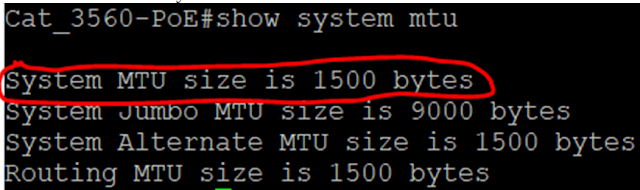

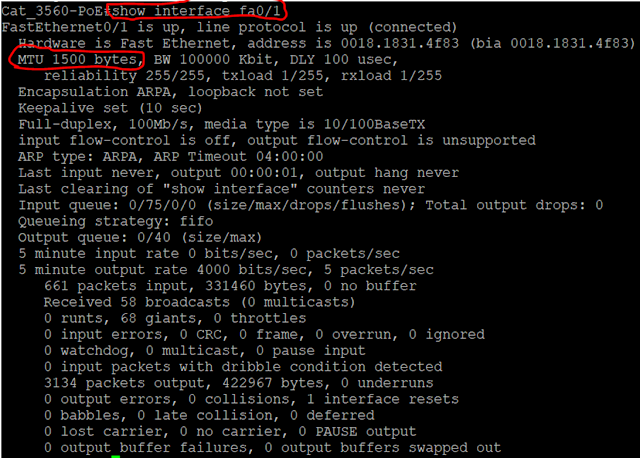

Command: "show system mtu"

Let's experiment with this network in its current state, since an MTU of 1500 won't outright stop NSX from working. That is the tricky part about troubleshooting MTU problems. These are my hosts:

First you need to identify your host's VTEP. In the vSphere web UI, this will be represented as a vmk interface on the host. In my case it's vmk1. You can tell it is the VTEP because the TCP/IP Stack is vxlan:

VTEP for Host 2:

VTEP for Host 3:

Your primary diagnostic tool here is going to be ping packets sent from the VTEP interfaces to other VTEP interfaces. Most customers use virtual machine interfaces to "test" MTU. That method is more of a nasty discovery than a test.

Host 2 - 172.17.6.2

Host 3 - 172.17.6.3

Host 4 - 172.17.6.4

We need to run vmkping from the ESXi host CLI. You can read about it here:

https://kb.vmware.com/s/article/1003728

vmkping ++netstack=vxlan -I vmk1 -d <destination ip address> -s <payload size in bytes>

Testing host 2 to host 3:

vmkping ++netstack=vxlan -I vmk1 -d 172.17.6.3 -s 1472

vmkping ++netstack=vxlan -I vmk1 -d 172.17.6.3 -s 1500

Clearly we cannot send a ping with 1500 bytes of data across a 1500 MTU network, so 1600 MTU packets are out of the question. The largest ping that gets through carries 1472 bytes of data, because the -s value counts only the ICMP payload and the IP and ICMP headers add another 28 bytes. What this means is that if a VM sends traffic that gets encapsulated in VXLAN, the NSX VTEPs will encapsulate the packet and send it along, as long as the encapsulated packet still fits within 1500 bytes. The end user thinks everything is fine. The problem comes when the application VMs are put onto the overlay network and start to stress the network with larger packet sizes. Some packets will be sent, others will be dropped, and without question, NSX will be blamed as a broken product at this point. Let's check if we can send packets between two VMs on the overlay.
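The 1472 figure falls out of simple header arithmetic. Here is a minimal sketch in Python, assuming IPv4 with no IP options (a 20-byte header) and an 8-byte ICMP echo header. The function names are my own and are not part of any VMware tool.

```python
IPV4_HEADER = 20  # bytes, assuming no IP options
ICMP_HEADER = 8   # bytes, ICMP echo header


def ping_packet_size(payload: int) -> int:
    """Size of the IP packet produced by a ping carrying `payload` data bytes."""
    return payload + ICMP_HEADER + IPV4_HEADER


def max_ping_payload(mtu: int) -> int:
    """Largest ping payload that still fits in one unfragmented IP packet."""
    return mtu - IPV4_HEADER - ICMP_HEADER


print(max_ping_payload(1500))  # 1472
print(ping_packet_size(1500))  # 1528
print(max_ping_payload(1600))  # 1572
```

In other words, a ping with 1500 bytes of data is really a 1528-byte packet, which is why it cannot cross a 1500 MTU link with fragmentation disabled.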

Here is where the confusion comes in for most people working with overlay networks. The VM has an MTU setting that is irrelevant to the network underlay. 1600 MTU is only required for the network underlay. The VMs that reside in the overlay should not have their MTU settings changed.

Host 2: VM Peppermint02 --> Logical Switch VNI 5002

Host 3: VM Windows_Server --> Logical Switch VNI 5002

Let's send some ping packets between the VMs:

Windows to Peppermint Linux:

Peppermint Linux to Windows:

As you can see, there is no problem sending these packets. So, for the layman, everything is fine with NSX and with the network, right? This is COMPLETELY WRONG! What happens when we try to send packets with more data inside them (i.e. larger packets)?

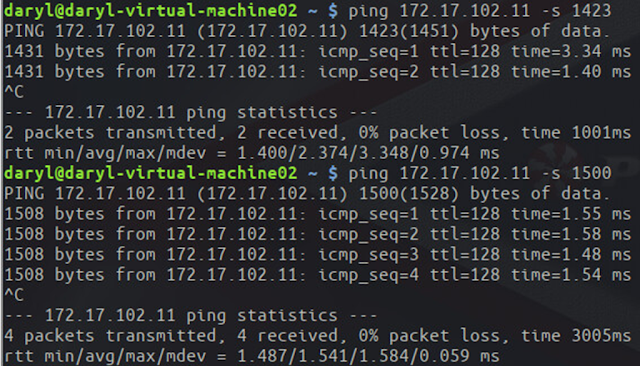

In the overlay (i.e. from the VMs), if we send a ping with 1423 bytes of data, the packet does not arrive at the destination because it is dropped by the underlay network. The host VTEP adds the VXLAN headers, which pushes the packet past 1500 bytes, so the switch drops it. However, if we lower the ping size by a single byte, to 1422 bytes, everything works fine. This can be a nightmare to troubleshoot when an application is using the network, because we have no idea what size of packet the application is sending. It will fluctuate with the needs of the application, and unpredictable data transfer will result.
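The one-byte cliff between 1422 and 1423 can be reproduced on paper. The sketch below is my own illustration, not NSX code. It assumes an IPv4 outer header, no VLAN tag on the inner frame, and the usual VXLAN layering in which the VM's whole Ethernet frame is wrapped in VXLAN, UDP, and IP headers.

```python
ICMP_HEADER = 8      # ICMP echo header inside the VM's packet
INNER_IPV4 = 20      # the VM's own IP header
INNER_ETHERNET = 14  # the VM's Ethernet header, carried inside the tunnel
VXLAN_HEADER = 8
OUTER_UDP = 8
OUTER_IPV4 = 20      # added by the host VTEP


def outer_packet_size(vm_ping_payload: int) -> int:
    """Size of the outer IP packet the underlay sees for one VM ping."""
    inner_ip_packet = vm_ping_payload + ICMP_HEADER + INNER_IPV4
    inner_frame = inner_ip_packet + INNER_ETHERNET
    return inner_frame + VXLAN_HEADER + OUTER_UDP + OUTER_IPV4


for payload in (1422, 1423):
    size = outer_packet_size(payload)
    verdict = "fits" if size <= 1500 else "dropped"
    print(payload, size, verdict)
```

Under those assumptions a 1422-byte ping becomes exactly a 1500-byte packet in the underlay, and one more byte tips it over to 1501.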

Now let's fix the network and abide by the NSX installation requirements of 1600 MTU in the underlay:

Now our MTU is correctly configured. Let's run all of our tests again!

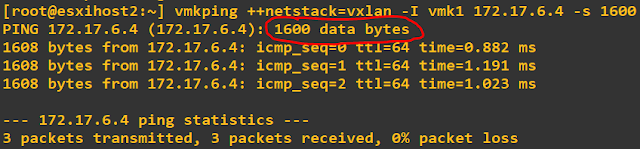

Now let's send data from the host VTEP:

Host 2 --> Host 3:

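OK, wait a minute, didn't I set my physical switch to 1600 MTU as called out by the NSX installation requirements? Then why can't I send a packet of 1600 bytes from the host VTEP? I could not explain it at first. I found a VMware community blog post attributing this to the -d switch, which sets the don't-fragment bit. With -d set, 1600 bytes of ping data plus 28 bytes of IP and ICMP headers makes a 1628-byte packet, which does not fit in a 1600 MTU. If we remove -d, the host is free to fragment the packet, and we can ping with 1600 bytes of data just fine.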
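One way to see why dropping -d makes the ping succeed is to work out what the sender puts on the wire once fragmentation is allowed. This is a rough sketch of standard IPv4 fragmentation, assuming 20-byte IP headers and fragments filled as full as possible. The function name is hypothetical, and real stacks may split the data differently.

```python
IPV4_HEADER = 20  # bytes, assuming no IP options
ICMP_HEADER = 8   # bytes, ICMP echo header


def fragment_sizes(ping_payload: int, mtu: int) -> list:
    """IP packet sizes sent for one ping when fragmentation is allowed."""
    remaining = ping_payload + ICMP_HEADER  # bytes the IP layer has to carry
    room = mtu - IPV4_HEADER                # data bytes available per packet
    sizes = []
    while remaining > room:
        # Every fragment except the last carries a multiple of 8 bytes.
        chunk = room // 8 * 8
        sizes.append(chunk + IPV4_HEADER)
        remaining -= chunk
    sizes.append(remaining + IPV4_HEADER)
    return sizes


print(fragment_sizes(1572, 1600))  # [1600]
print(fragment_sizes(1600, 1600))  # [1596, 52]
```

So a ping with 1600 bytes of data and no -d never puts a packet larger than 1600 bytes on the wire. To prove the path really carries a full 1600-byte packet, keep -d and use a 1572-byte payload.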

The actual MTU required by VXLAN is about 1554 bytes (a 1500-byte VM packet plus roughly 54 bytes of encapsulation headers), but 1600 is a round number that is easy to remember:
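One common way to arrive at 1554 is to add up the encapsulation headers. This is a sketch under my own assumptions: a 1500-byte VM MTU, an IPv4 outer header, and an 802.1Q VLAN tag on the inner frame. Other header combinations give slightly different totals.

```python
def required_underlay_mtu(vm_mtu: int = 1500, inner_vlan_tag: bool = True) -> int:
    """Underlay IP MTU needed to carry a full-size VM packet inside VXLAN."""
    inner_ethernet = 14 + (4 if inner_vlan_tag else 0)  # VM frame header
    vxlan, udp, outer_ipv4 = 8, 8, 20                   # added by the VTEP
    return vm_mtu + inner_ethernet + vxlan + udp + outer_ipv4


print(required_underlay_mtu())                      # 1554
print(required_underlay_mtu(inner_vlan_tag=False))  # 1550
print(1600 - required_underlay_mtu())               # 46 bytes of headroom
```

Either way, 1600 leaves a comfortable margin above what the encapsulation needs.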

Let's increase the MTU on my switch to the maximum size supported and send some higher MTU packets:

I'll leave you with this final note. The VMware dvSwitch must also have an MTU of 1600, but the NSX software makes this change for you once it's installed on the cluster.

The place I see MTU impacting customers the most is Cross-vCenter NSX. In that architecture, the network overlay spans two or more geographically isolated datacenters. In these cases, you need a private WAN service provider to transport your packets from datacenter to datacenter. Some common technologies for this include MPLS, Metro Ethernet, VPLS, and virtual leased lines. Amazon uses a blanket term for all of these: Direct Connect (DX). Note that Direct Connect is not an actual WAN technology; the term is used to make WAN technology comprehensible to non-technical people. The service provider must also support 1600 MTU, since your ESXi hosts will be sending VXLAN packets over the WAN connection between datacenters. Use vmkping to validate your network and the service provider's. Isolate problem devices with traceroute. If 1600 MTU packets cannot traverse the network, scrutinize every hop in the traceroute output. Every host in the NSX transport zone should be able to send a VXLAN packet to every other host in the same transport zone. Have the service provider adjust their networking devices accordingly (or adjust the components you have administrative control of yourself).
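Checking every host against every other host gets tedious by hand, so it can help to generate the list of tests first. The sketch below only builds the vmkping command lines for my three lab VTEPs and runs nothing. The helper name is hypothetical, and the payload is the underlay MTU minus 28 bytes of IP and ICMP headers, so that -d can stay on.

```python
from itertools import permutations

# VTEP addresses from my lab; replace with your own.
VTEPS = {"Host 2": "172.17.6.2", "Host 3": "172.17.6.3", "Host 4": "172.17.6.4"}


def mesh_commands(vteps: dict, underlay_mtu: int = 1600, vmk: str = "vmk1") -> list:
    """Return (source host, vmkping command) pairs covering every direction."""
    payload = underlay_mtu - 28  # leave room for the IP and ICMP headers
    commands = []
    for (source, _), (_, dest_ip) in permutations(vteps.items(), 2):
        command = f"vmkping ++netstack=vxlan -I {vmk} -d {dest_ip} -s {payload}"
        commands.append((source, command))
    return commands


for source, command in mesh_commands(VTEPS):
    print(f"run on {source}: {command}")
```

With three hosts this prints six commands, one per direction between each pair. Any command that fails points at a path that cannot carry a full 1600-byte packet.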

Acronyms:

MTU - Maximum Transmission Unit

VXLAN - Virtual Extensible Local Area Network

TCP/IP - Transmission Control Protocol / Internet Protocol

CCNP - Cisco Certified Network Professional

VTEP - VXLAN Tunnel Endpoint

WAN - Wide Area Network

MPLS - Multiprotocol Label Switching

VPLS - Virtual Private LAN Service
