VXLAN versus GENEVE (NSX-V vs. NSX-T)

August 14th, 2021

With NSX-T overlay networks ramping up and NSX-V overlay networks being phased out, it's a good time to look at one of the fundamental differences between them. NSX-V uses VXLAN as its encapsulation protocol, while NSX-T uses the more recent GENEVE encapsulation protocol. Both require physical networking devices to have their MTU raised to 1600 bytes or greater. We'll take a detailed look at why. First, the basics:

VXLAN is:

- short for Virtual eXtensible Local Area Network

- Defined in RFC 7348 - https://datatracker.ietf.org/doc/html/rfc7348

- Uses UDP port 4789

- 8-byte header

GENEVE is:

- short for Generic Network Virtualization Encapsulation

- Defined in RFC 8926 - https://datatracker.ietf.org/doc/html/rfc8926

- Uses UDP port 6081

- 8-byte fixed header plus variable-length options (16 bytes in total in the NSX-T example used in this article)
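To make the header sizes concrete, here is a minimal Python sketch that packs both headers with the standard `struct` module, following the field layouts in RFC 7348 (VXLAN) and RFC 8926 (GENEVE). The function names are hypothetical, and the 8 bytes of zero-filled GENEVE options are only a placeholder used to reach the 16-byte total quoted above; they are not the options NSX-T actually sends.

```python
import struct

def vxlan_header(vni: int) -> bytes:
    """Build the 8-byte VXLAN header (RFC 7348).

    Word 1: flags byte (I flag = 0x08) followed by 24 reserved bits.
    Word 2: 24-bit VNI followed by 8 reserved bits.
    """
    if not 0 <= vni < 2**24:
        raise ValueError("VNI must fit in 24 bits")
    return struct.pack("!II", 0x08 << 24, vni << 8)

def geneve_header(vni: int, options: bytes = b"") -> bytes:
    """Build a GENEVE header (RFC 8926): 8-byte base header plus options.

    Opt Len is a 6-bit count of 4-byte words, so options must be a
    multiple of 4 bytes and at most 252 bytes long.
    """
    if not 0 <= vni < 2**24:
        raise ValueError("VNI must fit in 24 bits")
    if len(options) % 4 != 0 or len(options) > 252:
        raise ValueError("options must be a multiple of 4 bytes, at most 252")
    ver_optlen = (0 << 6) | (len(options) // 4)  # version 0 + option length in words
    flags = 0                                    # O (control) and C (critical) bits clear
    proto = 0x6558                               # Transparent Ethernet Bridging: inner Ethernet frame
    return struct.pack("!BBHI", ver_optlen, flags, proto, vni << 8) + options

# Hypothetical VNI 5001; placeholder options, not real NSX-T TLVs.
print(len(vxlan_header(5001)))                  # 8
print(len(geneve_header(5001)))                 # 8 (base header only)
print(len(geneve_header(5001, bytes(8))))       # 16
```

The point of the sketch is that GENEVE's fixed part is the same size as VXLAN's; the extra bytes come from its extensible options, which is exactly what makes GENEVE more flexible and slightly larger on the wire.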

From the perspective of the physical network, an overlay network is essentially just another application: NSX-T is an application using the IANA-registered UDP port 6081. Switching to the overlay's view of the physical network, you can think of the physical network as performing the same role as the backplane of a single physical switch. In a physical switch, packets enter the backplane, some forwarding logic is applied, and each packet leaves through the correct port. In an overlay, packets enter the overlay, some logic is applied, and they leave the overlay at the correct location. With NSX-T, your entire datacenter network effectively functions as a single switch backplane!

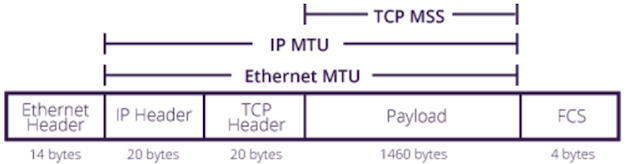

Before we dive into overlay packets and MTU, let's look at how MTU affects traditional 1500-byte network packets:

IPv4 header (20) + TCP header (20) + TCP payload (1460) = 1500 bytes

IPv4 header (20) + UDP header (8) + UDP payload (1472) = 1500 bytes

MTU is measured in bytes and defines the largest payload an Ethernet frame can carry, which for IP traffic means the largest IP packet. The Ethernet header and Frame Check Sequence (FCS) are not included in the calculation. This is why an Ethernet frame carrying the standard maximum of 1500 bytes actually occupies 1518 bytes: the Ethernet header is 14 bytes and the FCS is 4 bytes.
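The arithmetic above can be checked with a few lines of Python. This is a small sketch assuming IPv4 and TCP headers without options and an untagged Ethernet frame (no 802.1Q tag); the function names are illustrative only.

```python
IPV4_HEADER = 20  # bytes, no IP options
TCP_HEADER = 20   # bytes, no TCP options
UDP_HEADER = 8    # bytes
ETH_HEADER = 14   # destination MAC + source MAC + EtherType, untagged
ETH_FCS = 4       # Frame Check Sequence

def max_payload(mtu: int, l4_header: int) -> int:
    """Largest transport-layer payload that fits in one IPv4 packet at this MTU."""
    return mtu - IPV4_HEADER - l4_header

def frame_size(ip_packet_len: int) -> int:
    """Ethernet frame size for an IP packet: header + packet + FCS.

    Preamble and inter-frame gap are not counted.
    """
    return ETH_HEADER + ip_packet_len + ETH_FCS

print(max_payload(1500, TCP_HEADER))  # 1460
print(max_payload(1500, UDP_HEADER))  # 1472
print(frame_size(1500))               # 1518
```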

Now let's see why the NSX overlay requires an MTU of 1600 bytes or greater in the physical network:


Both encapsulation protocols add an outer Ethernet header, IP header, UDP header, and VXLAN/GENEVE header. The original Ethernet frame is preserved, but because it now sits inside another Ethernet frame, its header counts toward the outer frame's MTU. Refer to the image for the full arithmetic. Notice that when a VM sends a full 1500-byte packet, the VXLAN- or GENEVE-encapsulated packet is larger than 1500 bytes, so networking devices left at the default 1500-byte MTU will drop it. VMware recommends 1600 bytes as a safe round number for NSX. Technically, you could set your MTU to 1560 and GENEVE-encapsulated packets would still be forwarded without a problem. However, you should stick to a 1600-byte MTU, or to 9000-byte jumbo frames if all of your network devices support them.
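The math in the image can be reproduced as a short sketch. It assumes an IPv4 underlay (an IPv6 outer header would add 20 more bytes), an untagged inner frame, and the 16-byte GENEVE header used in this article; the constant and function names are illustrative, not taken from any VMware tool.

```python
OUTER_IPV4 = 20   # outer IPv4 header, no options
OUTER_UDP = 8     # outer UDP header
INNER_ETH = 14    # inner (original) Ethernet header, carried inside the tunnel
OUTER_ETH = 14    # outer Ethernet header, not counted against MTU
ETH_FCS = 4       # outer FCS, not counted against MTU

VXLAN_HEADER = 8
GENEVE_HEADER = 16  # assumption from this article: 8-byte base + 8 bytes of options

def required_underlay_mtu(inner_ip_mtu: int, tunnel_header: int) -> int:
    """Outer IP packet size when the VM sends a full inner_ip_mtu packet."""
    return OUTER_IPV4 + OUTER_UDP + tunnel_header + INNER_ETH + inner_ip_mtu

for name, hdr in (("VXLAN", VXLAN_HEADER), ("GENEVE", GENEVE_HEADER)):
    mtu = required_underlay_mtu(1500, hdr)
    print(f"{name}: underlay MTU >= {mtu}, frame on wire {OUTER_ETH + mtu + ETH_FCS}")
# VXLAN: underlay MTU >= 1550, frame on wire 1568
# GENEVE: underlay MTU >= 1558, frame on wire 1576
```

Under these assumptions GENEVE needs 1558 bytes, which is why 1560 technically works, while 1600 leaves headroom for an inner 802.1Q tag (4 bytes) or larger GENEVE options.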

Putting this into the context of a real-world scenario, when would this information matter? Consider an example from one of my customer interactions. Oracle RAC (Real Application Clusters) requires the network to support 8922 bytes of payload per packet. Adding 20 bytes of IP header and 8 bytes of UDP header gives 8950 bytes of data and overhead before VXLAN encapsulation. VXLAN adds 50 bytes of overhead (outer IP 20, outer UDP 8, VXLAN 8, inner Ethernet 14), creating a 9000-byte packet. So even with jumbo frames, this application is already sending the largest packet a 9000-byte MTU network can forward. Now migrate the application to NSX-T! Swap the 8-byte VXLAN header for the 16-byte GENEVE header, and what do you get? A broken application attempting to send a 9008-byte packet! In my customer's case, we decided to lower the VMs' interface MTU by 8 bytes. Fortunately, we only had to change a handful of interface MTU settings!
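The Oracle RAC numbers can be worked through the same way. This sketch assumes a 9000-byte underlay MTU, an IPv4 underlay, an untagged inner frame, and the 16-byte GENEVE header from this article; the names are hypothetical.

```python
UNDERLAY_MTU = 9000           # jumbo-frame MTU on the physical network
IPV4, UDP, INNER_ETH = 20, 8, 14

def overlay_overhead(tunnel_header: int) -> int:
    # Bytes counted against the underlay MTU:
    # outer IPv4 + outer UDP + tunnel header + inner Ethernet header.
    return IPV4 + UDP + tunnel_header + INNER_ETH

app_packet = 8922 + IPV4 + UDP                      # inner IP packet from the VM
vxlan_packet = app_packet + overlay_overhead(8)     # fits exactly
geneve_packet = app_packet + overlay_overhead(16)   # 8 bytes too large

# Largest inner IP packet (the VM interface MTU) that still fits over GENEVE.
vm_mtu_geneve = UNDERLAY_MTU - overlay_overhead(16)

print(app_packet, vxlan_packet, geneve_packet)  # 8950 9000 9008
print(app_packet - vm_mtu_geneve)               # 8 (bytes to trim from the VM MTU)
```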

Oracle RAC:

https://support.oracle.com/knowledge/Oracle%20Cloud/341788_1.html
